-

Medical code classification based on free-text clinical notes (09/2023)

Guillaume Kunsch, Mickael Assaraf, Alexander Belikov

[Thesis]

TL;DR: Thesis on medical code classification under the supervision of Dr Alexander Belikov.

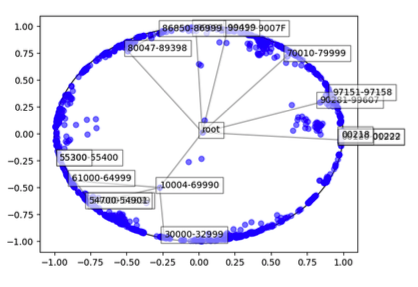

We use RoBERTa-PM to encode the clinical notes and build a custom hierarchical decoder on top of it to beat the current SOTA on rare codes. We also explore the potential of out-of-distribution data during training and of hyperbolic space for representing the code hierarchy.
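The text does not say which model of hyperbolic space the thesis uses. As a minimal sketch, assuming the Poincaré ball model (a common choice for embedding hierarchies), the distance between two code embeddings can be computed as below. Distances blow up near the boundary of the ball, which is what lets tree-like hierarchies embed with low distortion. The function name and the 2-D example embeddings are hypothetical.

```python
import math
from typing import Sequence

def poincare_distance(u: Sequence[float], v: Sequence[float]) -> float:
    """Geodesic distance between two points of the open unit ball (Poincare model)."""
    if len(u) != len(v):
        raise ValueError("points must have the same dimension")
    sq_u = sum(x * x for x in u)
    sq_v = sum(x * x for x in v)
    if sq_u >= 1.0 or sq_v >= 1.0:
        raise ValueError("points must lie strictly inside the unit ball")
    sq_diff = sum((a - b) ** 2 for a, b in zip(u, v))
    # d(u, v) = arcosh(1 + 2 ||u - v||^2 / ((1 - ||u||^2) (1 - ||v||^2)))
    return math.acosh(1.0 + 2.0 * sq_diff / ((1.0 - sq_u) * (1.0 - sq_v)))

# Hypothetical embeddings: a parent code near the origin, two child codes near the boundary.
parent = [0.0, 0.0]
child_a = [0.9, 0.0]
child_b = [0.0, 0.9]
d_parent_child = poincare_distance(parent, child_a)
d_siblings = poincare_distance(child_a, child_b)
```

With these values the two siblings end up farther from each other than either is from the parent, mirroring their positions in the code tree.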

-

Deep RL for multi-agent interaction (03/2023)

Marc Schachtsiek, Guillaume Kunsch

[Code]

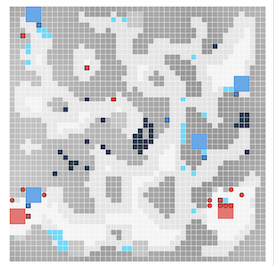

TL;DR: A Deep Reinforcement Learning project for the Lux AI challenge, which is about learning an optimal multi-agent policy for survival in a hostile environment. This was an open project in which we had to design our own loss function from the game's multiple objectives and find a way to handle the many instabilities caused by the large state-action space. We applied concepts from our coursework in theoretical and deep RL, such as PPO, TRPO or A2C, and optimized the agents' behavior against an opponent. Interestingly, a combination of rule-based and RL-based behavior performed better, both in our case and at the competition level: a reminder that ML is not always the best answer.
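For reference, PPO (one of the methods listed above) optimizes a clipped surrogate objective. The NumPy sketch below computes that standard loss for a batch of sampled actions; it is not the project's own multi-objective loss, which is not described here, and the function name and default `clip_eps=0.2` are illustrative assumptions.

```python
import numpy as np

def ppo_clip_loss(new_logp, old_logp, advantages, clip_eps=0.2):
    """PPO clipped surrogate objective, negated so that it can be minimized.

    new_logp / old_logp: log-probabilities of the taken actions under the current
    policy and under the policy that collected the data.
    advantages: advantage estimates for those same actions.
    """
    ratio = np.exp(new_logp - old_logp)                      # pi_new(a|s) / pi_old(a|s)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # Take the pessimistic (smaller) term per sample, average, then negate.
    return -np.mean(np.minimum(unclipped, clipped))

# Hypothetical batch of three actions.
loss = ppo_clip_loss(np.array([-0.9, -1.2, -0.3]),
                     np.array([-1.0, -1.0, -0.5]),
                     np.array([0.5, -1.0, 2.0]))
```

The clipping removes the incentive to push the probability ratio outside `[1 - clip_eps, 1 + clip_eps]`, which is what keeps each policy update close to the data-collecting policy.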

-

Automated essay scoring (03/2023)

Hippolyte Gisserot, Guillaume Kunsch

[Code + Report]

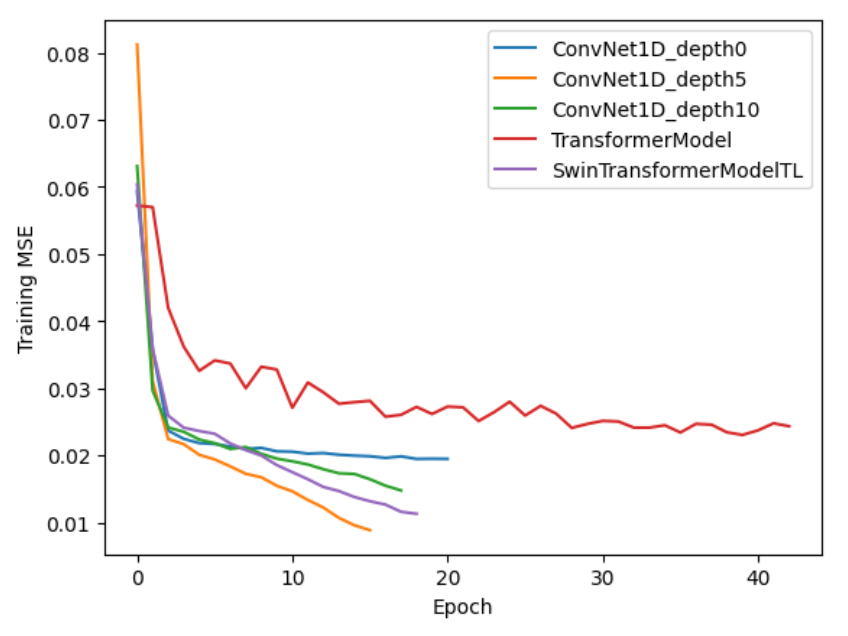

TL;DR: A quick project done during an NLP class with Hippolyte Gisserot. We benchmark several models (LSTM, CNN, Transformers) on the Kaggle ASAP challenge dataset, where the goal is to grade an essay based on its content. We use classic word-level embeddings such as Word2Vec and GloVe. Interestingly, without any hyperparameter tuning, CNNs perform on par with the best Transformer model.
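The Kaggle ASAP essay-scoring competition ranked submissions by quadratic weighted kappa (QWK), which measures agreement between predicted and human grades while penalizing large disagreements more than small ones. The text does not say which metric the benchmark reports, so the pure-Python sketch below is only an assumption about how such models are typically compared; the function name is hypothetical.

```python
from typing import Sequence

def quadratic_weighted_kappa(rater_a: Sequence[int], rater_b: Sequence[int],
                             min_rating: int, max_rating: int) -> float:
    """Agreement between two integer ratings in [min_rating, max_rating]; 1 = perfect."""
    if len(rater_a) != len(rater_b):
        raise ValueError("rating lists must have the same length")
    n_cat = max_rating - min_rating + 1
    if n_cat < 2:
        raise ValueError("need at least two rating categories")
    n = len(rater_a)
    # Observed matrix: observed[i][j] = number of essays graded i by A and j by B.
    observed = [[0.0] * n_cat for _ in range(n_cat)]
    for a, b in zip(rater_a, rater_b):
        observed[a - min_rating][b - min_rating] += 1
    hist_a = [sum(row) for row in observed]
    hist_b = [sum(observed[i][j] for i in range(n_cat)) for j in range(n_cat)]
    num = den = 0.0
    for i in range(n_cat):
        for j in range(n_cat):
            weight = (i - j) ** 2 / (n_cat - 1) ** 2   # quadratic disagreement penalty
            expected = hist_a[i] * hist_b[j] / n        # agreement expected by chance
            num += weight * observed[i][j]
            den += weight * expected
    return 1.0 - num / den
```

A score of 0 corresponds to chance-level agreement and negative values to systematic disagreement.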

-

Adversarial attacks for image classification (01/2023)

Benjamin Sykes, Guillaume Kunsch, Hippolyte Gisserot

[Code + Report]

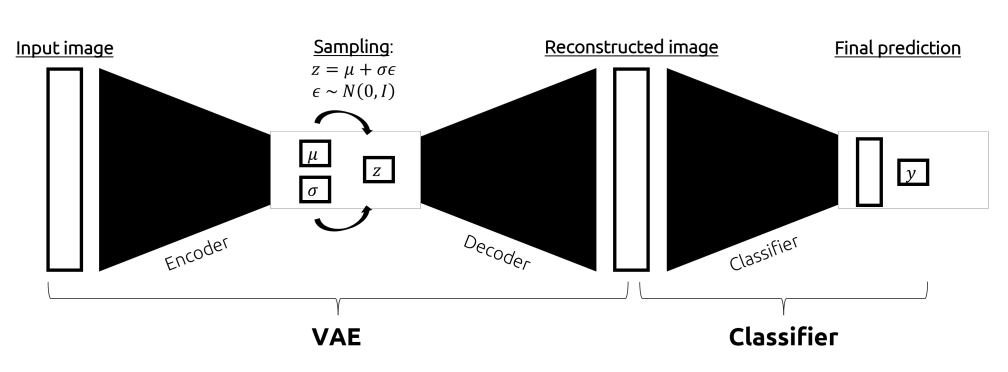

TL;DR: In this work, we study the robustness of neural network classifiers to white-box and black-box adversarial attacks. We implement the Fast Gradient Sign Method (FGSM) and the Projected Gradient Descent (PGD) attack and evaluate their effectiveness on CIFAR-10. We also study the impact of adversarial training on perturbed images and how a VAE can be leveraged to introduce noise.
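To make the two attacks concrete, here is a minimal NumPy sketch. Instead of a CIFAR-10 network it assumes a toy logistic-regression classifier, whose gradient with respect to the input has a closed form; with a deep network that gradient would come from autodiff instead. The weights, the 16-pixel "image", and the `eps`/`alpha` values are all hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def bce_loss(x, y, w, b):
    """Binary cross-entropy of the toy logistic-regression classifier."""
    p = sigmoid(x @ w + b)
    return -(y * np.log(p) + (1 - y) * np.log(1 - p))

def input_gradient(x, y, w, b):
    """Gradient of the loss with respect to the input x: (sigmoid(w.x + b) - y) * w."""
    return (sigmoid(x @ w + b) - y) * w

def fgsm(x, y, w, b, eps):
    """FGSM: a single step of size eps along the gradient sign, clipped to [0, 1]."""
    x_adv = x + eps * np.sign(input_gradient(x, y, w, b))
    return np.clip(x_adv, 0.0, 1.0)

def pgd(x, y, w, b, eps, alpha, steps):
    """PGD: repeated signed steps of size alpha, projected onto the L-inf ball of radius eps."""
    x_adv = x.copy()
    for _ in range(steps):
        x_adv = x_adv + alpha * np.sign(input_gradient(x_adv, y, w, b))
        x_adv = np.clip(x_adv, x - eps, x + eps)  # projection onto the eps-ball around x
        x_adv = np.clip(x_adv, 0.0, 1.0)          # keep a valid image
    return x_adv

rng = np.random.default_rng(0)
w = rng.normal(size=16)   # hypothetical trained weights
b = 0.0
x = np.full(16, 0.5)      # hypothetical input with pixels in [0, 1]
y = 1.0                   # true label
x_fgsm = fgsm(x, y, w, b, eps=0.1)
x_pgd = pgd(x, y, w, b, eps=0.1, alpha=0.02, steps=10)
```

Both perturbed inputs stay within `eps` of the original in every pixel but increase the classifier's loss. PGD can be seen as FGSM iterated with a smaller step and a projection after each step.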

-

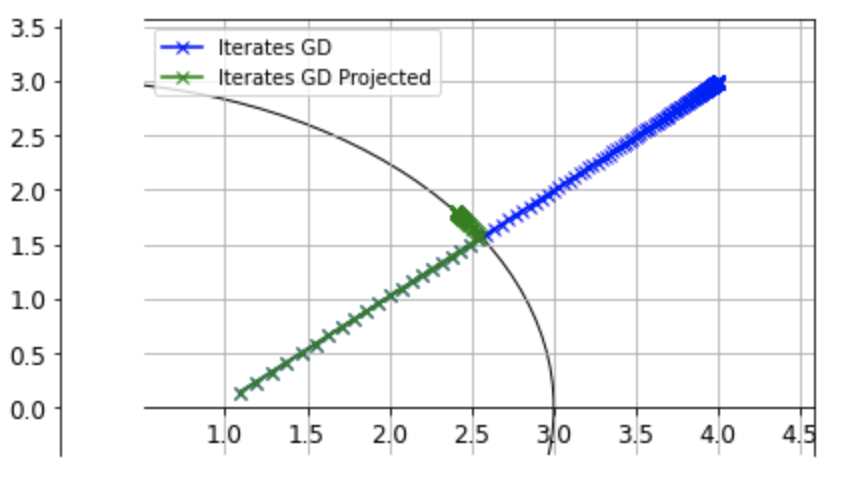

Optimization for ML (12/2022)

Guillaume Kunsch

[Code]

TL;DR: A collection of notebooks implementing various algorithms whose theoretical performance was studied in the Optimization for Machine Learning course taught by Gabriel Peyré. Algorithms implemented: SGD, some autodiff from scratch, PGD, proximal gradient, heavy ball, Nesterov, ...
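As an illustration of one of these methods, the sketch below applies proximal gradient descent (ISTA) to the Lasso problem min_x 1/2 ||Ax - b||^2 + lam ||x||_1, where the proximal operator of the l1 term is soft-thresholding. The choice of problem, the constant step size 1/L with L = ||A||_2^2, and the function names are assumptions for illustration, not taken from the notebooks.

```python
import numpy as np

def soft_threshold(z, tau):
    """Proximal operator of tau * ||.||_1: shrink each entry towards 0 by tau."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)

def ista(A, b, lam, n_iter=500):
    """Proximal gradient descent for min_x 0.5 * ||A x - b||^2 + lam * ||x||_1."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2   # 1 / L, L = Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - b)                          # gradient of the smooth part
        x = soft_threshold(x - step * grad, step * lam)   # prox step on the l1 part
    return x

def lasso_objective(A, b, lam, x):
    return 0.5 * np.sum((A @ x - b) ** 2) + lam * np.sum(np.abs(x))

# Hypothetical sparse regression problem.
rng = np.random.default_rng(0)
A = rng.normal(size=(20, 5))
x_true = np.array([1.5, 0.0, 0.0, -2.0, 0.0])
b = A @ x_true
x_hat = ista(A, b, lam=0.1)
```

The gradient step handles the smooth least-squares term and the soft-thresholding step handles the non-smooth l1 term exactly, which is what drives small coefficients to exactly zero.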

-

Quantitative study on the relationship between cryptocurrencies and social media flows (06/2021)

Guillaume Kunsch

[Code + Report]

TL;DR: Thesis supervised by Prof. Mira McWilliams.

We use the Twitter API to collect real-time tweets related to Bitcoin and apply sentiment analysis to them. We then use this data to predict increases and decreases in Bitcoin's price. Interestingly, tweet volume seems to be a better predictor than sentiment in most time frames.
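One way to frame the prediction step is as binary classification: features describe tweet activity up to period t, and the label says whether the price rises at t + 1. The pure-Python sketch below builds such a dataset from aligned time series. The series names, the lag window, and the up/down labeling rule are assumptions, since the exact setup and classifier are not described here.

```python
from typing import List, Sequence, Tuple

def make_direction_dataset(
    prices: Sequence[float],
    tweet_volume: Sequence[float],
    mean_sentiment: Sequence[float],
    lags: int = 1,
) -> Tuple[List[List[float]], List[int]]:
    """Build (features, labels) for next-period price-direction classification.

    Features at period t: the last `lags` values of tweet volume, then the last `lags`
    values of mean sentiment, up to and including t.
    Label: 1 if the price rises from t to t + 1, else 0.
    """
    n = len(prices)
    if not (len(tweet_volume) == len(mean_sentiment) == n):
        raise ValueError("all series must have the same length")
    if lags < 1:
        raise ValueError("lags must be at least 1")
    X: List[List[float]] = []
    y: List[int] = []
    for t in range(lags - 1, n - 1):
        window = range(t - lags + 1, t + 1)
        X.append([tweet_volume[i] for i in window] + [mean_sentiment[i] for i in window])
        y.append(1 if prices[t + 1] > prices[t] else 0)
    return X, y

# Hypothetical hourly series.
X, y = make_direction_dataset(
    prices=[10.0, 11.0, 10.0, 12.0],
    tweet_volume=[100, 200, 150, 300],
    mean_sentiment=[0.1, 0.3, -0.2, 0.5],
    lags=2,
)
```

Training one classifier on the volume columns only and another on the sentiment columns only is one way to compare the two signals as predictors.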